I. Running/debugging torch distributed training in PyCharm

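The overall process is fairly simple; for a more detailed walkthrough, see section II below (running/debugging deepspeed distributed training in PyCharm).

The key steps are:

Symlink the distributed directory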

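Looking at the command used to launch distributed training, we first need to locate the file behind torch.distributed.launch (launch.py) and symlink it into the PyCharm project directory. Why a symlink rather than a copy? Because a symlink leaves the file's real path unchanged, launch.py can import the packages it needs without any modification.

On Ubuntu, create the symlink with the following command: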

ln -s /yourpython/lib/python3.6/site-packages/torch/distributed/ /yourprogram/

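The command above links launch.py's parent directory, distributed, rather than launch.py itself. That makes it easy to see that launch.py comes from a symlink and keeps it from being confused with the project's own files.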
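If you are unsure where the package lives in your interpreter, the source path for `ln -s` can be looked up from Python. The following is a minimal sketch, not part of the original workflow: `package_dir` is a hypothetical helper name, and `/yourprogram/` is the same placeholder used in the command above.

```python
import importlib.util
from pathlib import Path


def package_dir(name: str) -> Path:
    """Return the on-disk directory of an importable package."""
    spec = importlib.util.find_spec(name)
    if spec is None or not spec.submodule_search_locations:
        raise ModuleNotFoundError(f"{name!r} is not an importable package")
    # A regular package has exactly one search location: its directory.
    return Path(list(spec.submodule_search_locations)[0])


if __name__ == "__main__":
    try:
        src = package_dir("torch.distributed")
        # Print the symlink command instead of running it.
        print(f"ln -s {src} /yourprogram/")
    except ImportError as exc:
        print(f"package not found in this interpreter: {exc}")
```

Run it with the same interpreter that the training job uses, otherwise the printed path may point at a different environment.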

Set the PyCharm run parameters

Open PyCharm and click Run -> Edit Configurations to open the configuration dialog.

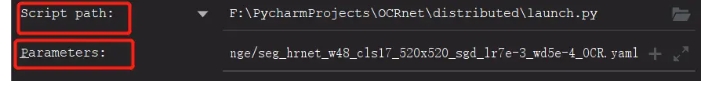

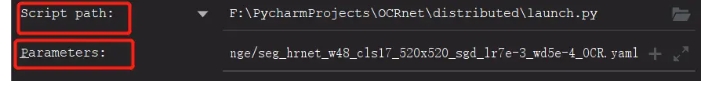

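Only two fields need to be set: Script path, which points to launch.py, and Parameters, which holds the arguments for launch.py. Following the command-line invocation, set them as follows.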

--nproc_per_node=4

tools/train.py --cfg xxx.yaml

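With the steps above, distributed training can be run from PyCharm. When debugging a model, though, it is still better to modify train.py and debug on a single GPU. Distributed mode can be debugged too; single-GPU mode simply makes the data flow easier to follow and cuts debugging time.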
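One way to let the same train.py serve both modes is to check the environment variables set by the launcher. This is only a sketch under assumptions: the launcher exports `WORLD_SIZE` to each worker, while whether `LOCAL_RANK` is exported (rather than passed as a `--local_rank` argument) depends on the torch version and launcher flags. `launch_info` is a hypothetical helper, not something from the original train.py.

```python
import os


def launch_info(env=None):
    """Describe how the current process was started.

    Falls back to single-process defaults when the launcher
    variables are absent, e.g. when train.py is run directly.
    """
    env = os.environ if env is None else env
    world_size = int(env.get("WORLD_SIZE", "1"))
    # Assumption: LOCAL_RANK is exported; default to 0 otherwise.
    local_rank = int(env.get("LOCAL_RANK", "0"))
    return {
        "distributed": world_size > 1,
        "world_size": world_size,
        "local_rank": local_rank,
    }


if __name__ == "__main__":
    info = launch_info()
    mode = "distributed" if info["distributed"] else "single-process"
    print(f"running in {mode} mode: {info}")
```

Running train.py directly then takes the single-process branch, which is the easier one to step through in the debugger.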

II. Running/debugging deepspeed distributed training in PyCharm

1. PyCharm version

I use 2020.1.

2. Environment

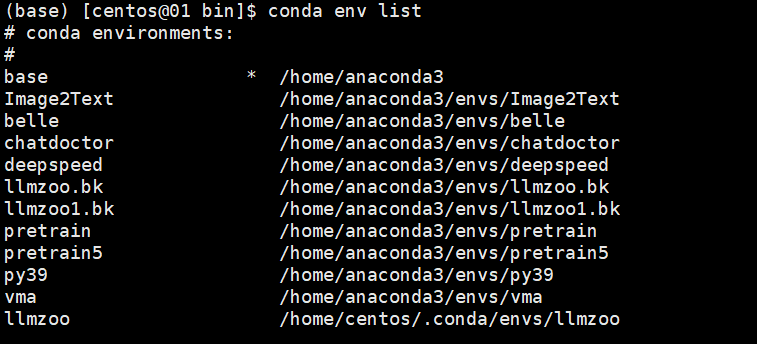

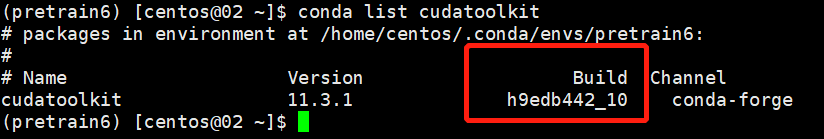

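(1) First, the server needs the matching environment set up in a conda virtual environment, along with a copy of the code and the corresponding model.

(2) Locally, you only need to copy the code and data, not the model.

(3) Code: I am mainly reproducing large language model pretraining with https://github.com/hiyouga/LLaMA-Factory; other codebases work the same way.

This is the main launch script. Other codebases work the same way, as long as they are launched with deepspeed.

pretrain.sh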

deepspeed --master_port=9901 src/train_bash.py \
--deepspeed ./ds_config.json \
--stage pt \
--do_train \
--model_name_or_path ../Yi-34B \
--dataset input_test \
--finetuning_type full \
--lora_target q_proj,v_proj \
--output_dir Yi-34B_output_test \
--overwrite_cache \
--per_device_train_batch_size 4 \
--gradient_accumulation_steps 4 \
--lr_scheduler_type cosine \
--logging_steps 5 \
--save_steps 300 \
--learning_rate 5e-5 \
--num_train_epochs 1.0 \
--preprocessing_num_workers 20 \
--plot_loss \
--bf16

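3. Local setup: installing deepspeed

Zip the deepspeed package from the server's virtual environment and copy it to the local machine.

On my server the package is located at /home/centos/anaconda3/envs/factory/lib/python3.10/site-packages/deepspeed/

Compress the deepspeed package into a zip file, copy it to the local project directory D:\code\LLaMA-Factory, and unzip it there to get D:\code\LLaMA-Factory\deepspeed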
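The zipping step can also be done from Python on the server. A minimal sketch using the standard library's `shutil.make_archive`; `zip_package` and the output location in the usage note are hypothetical, and only the package path comes from the text above.

```python
import shutil
from pathlib import Path


def zip_package(pkg_dir, out_base):
    """Zip a package directory so the archive unpacks to <name>/...

    out_base is the archive path without the .zip suffix.
    Returns the path of the created archive.
    """
    pkg = Path(pkg_dir)
    return shutil.make_archive(
        str(out_base),
        "zip",
        root_dir=str(pkg.parent),  # archive paths are relative to this
        base_dir=pkg.name,         # only this directory is included
    )
```

For example, `zip_package("/home/centos/anaconda3/envs/factory/lib/python3.10/site-packages/deepspeed", "/tmp/deepspeed")` would be expected to write /tmp/deepspeed.zip, which unpacks into a top-level deepspeed directory.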

4. Remote setup: symlink

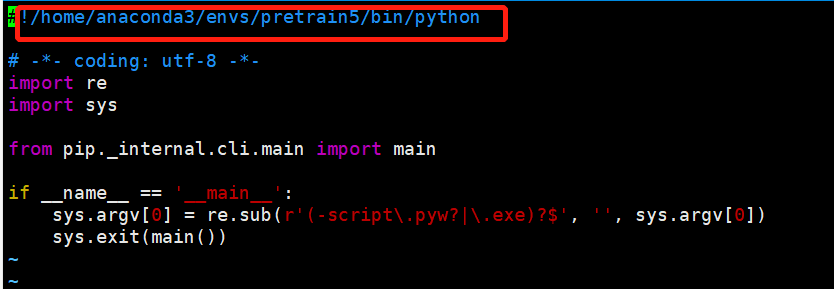

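Inspecting /home/centos/anaconda3/envs/factory/bin/deepspeed (for example with vim) shows that the module actually used is deepspeed.launcher.runner.

Looking at the command used to launch distributed training, we first need to locate the file behind deepspeed.launcher.runner (runner.py) and symlink it into the PyCharm project directory. Why a symlink rather than a copy? Because a symlink leaves the file's real path unchanged, runner.py can import the packages it needs without any modification.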

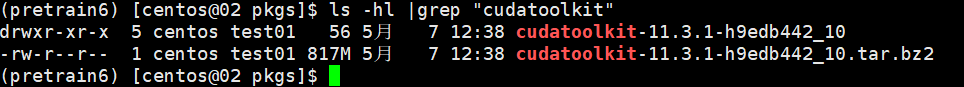

On CentOS, create the symlink with the following command:

ln -s /home/centos/anaconda3/envs/factory/lib/python3.10/site-packages/deepspeed/ /data/liulei/cpt/LLaMA-Factory/

To remove the symlink, use (note: no trailing slash, otherwise unlink fails on a link to a directory):

unlink /data/liulei/cpt/LLaMA-Factory/deepspeed
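The create and remove steps can be sketched in Python as well, which makes it easy to check that the link resolves to the real package. The helper names below are hypothetical, and the demo runs against throwaway temporary directories rather than the real paths.

```python
import os
import tempfile
from pathlib import Path


def link_package(src_dir, project_dir):
    """Symlink src_dir into project_dir under the same name."""
    src = Path(src_dir)
    link = Path(project_dir) / src.name
    if link.is_symlink():
        link.unlink()  # replace a stale link, never a real directory
    os.symlink(src, link, target_is_directory=True)
    return link


def remove_link(link):
    """Remove a symlink without touching the directory it points to."""
    link = Path(link)
    if not link.is_symlink():
        raise ValueError(f"{link} is not a symlink; refusing to remove it")
    link.unlink()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "site-packages" / "deepspeed"
        (src / "launcher").mkdir(parents=True)
        (src / "launcher" / "runner.py").write_text("# placeholder\n")
        project = Path(tmp) / "LLaMA-Factory"
        project.mkdir()

        link = link_package(src, project)
        # The file reached through the link resolves to the original.
        print(os.path.realpath(link / "launcher" / "runner.py"))
        remove_link(link)
        print("source still exists:", src.exists())
```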

5. PyCharm configuration

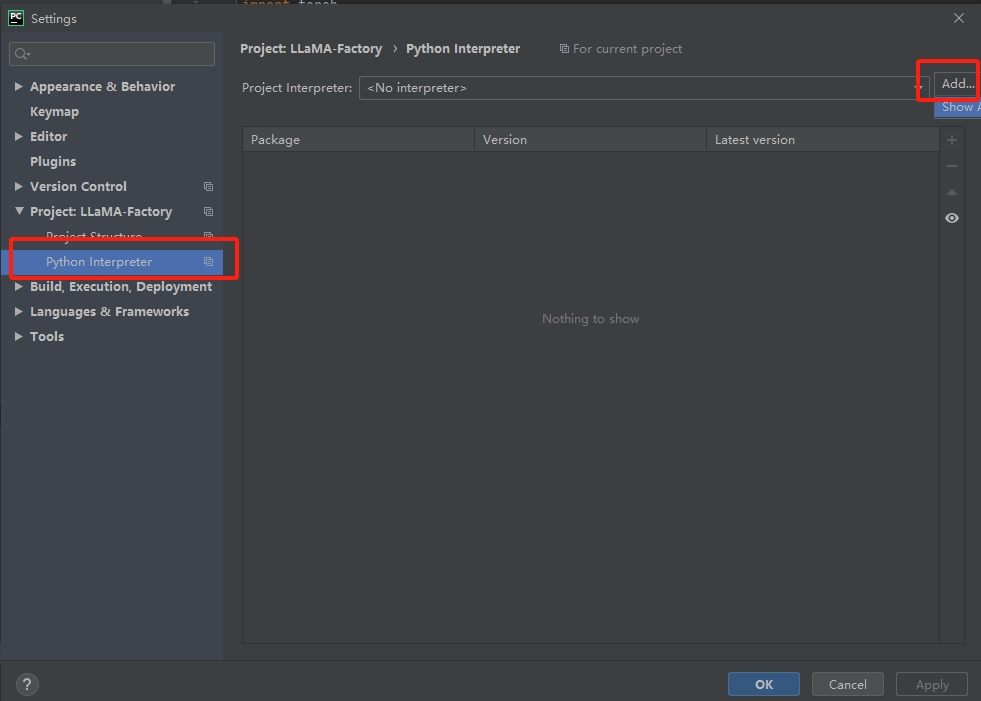

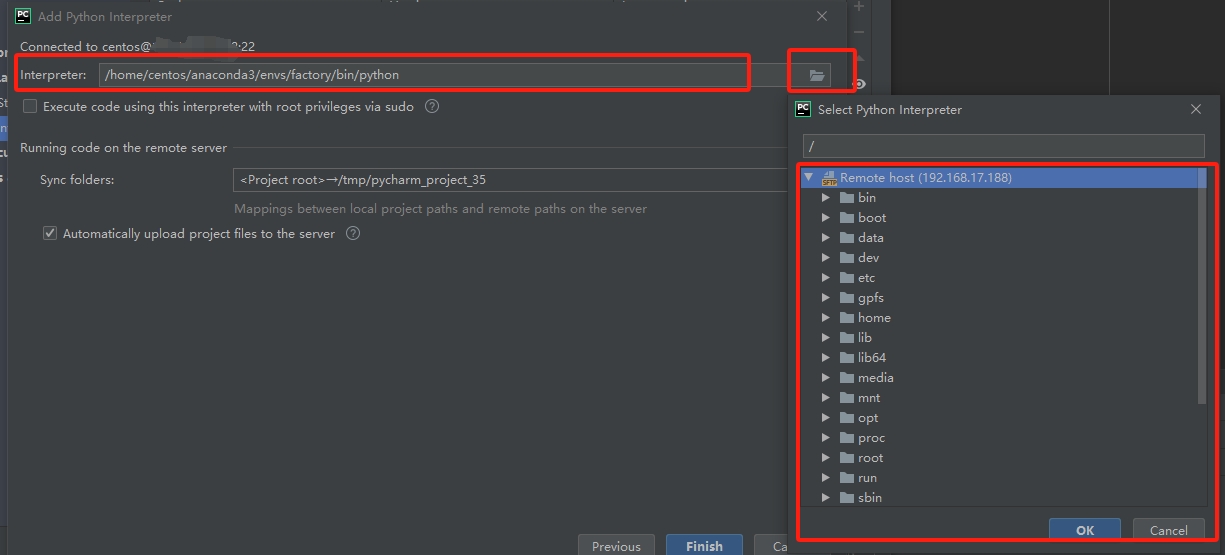

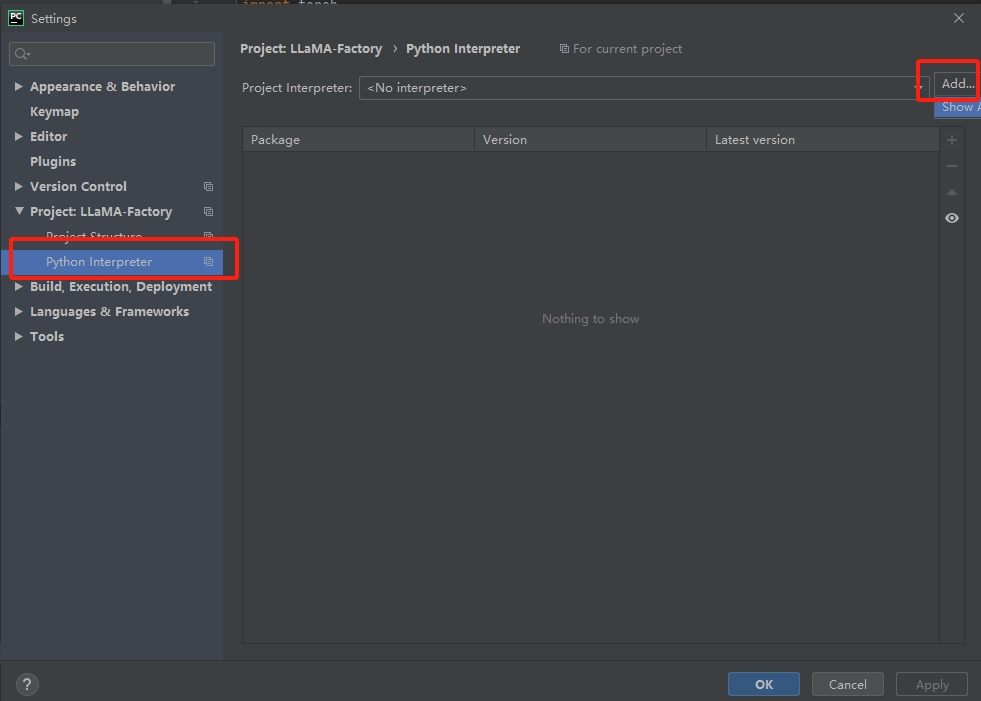

Configure the local project to use the remote server's Python interpreter:

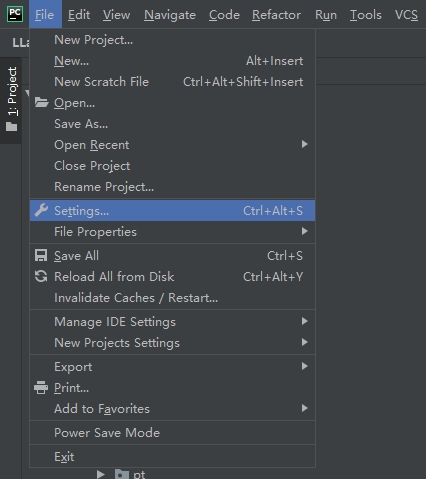

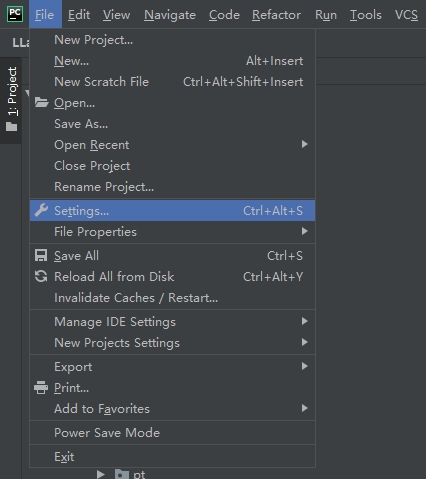

(1) Open Settings.

(2) Add a new interpreter.

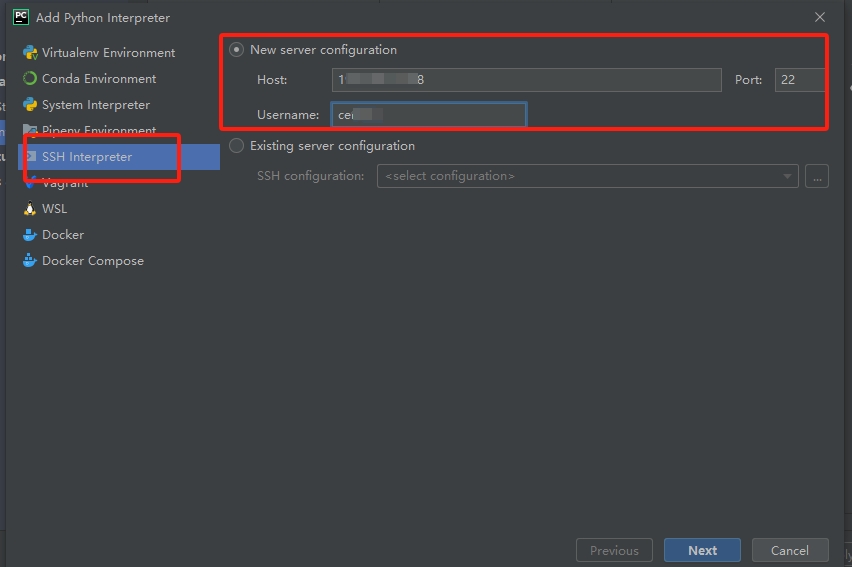

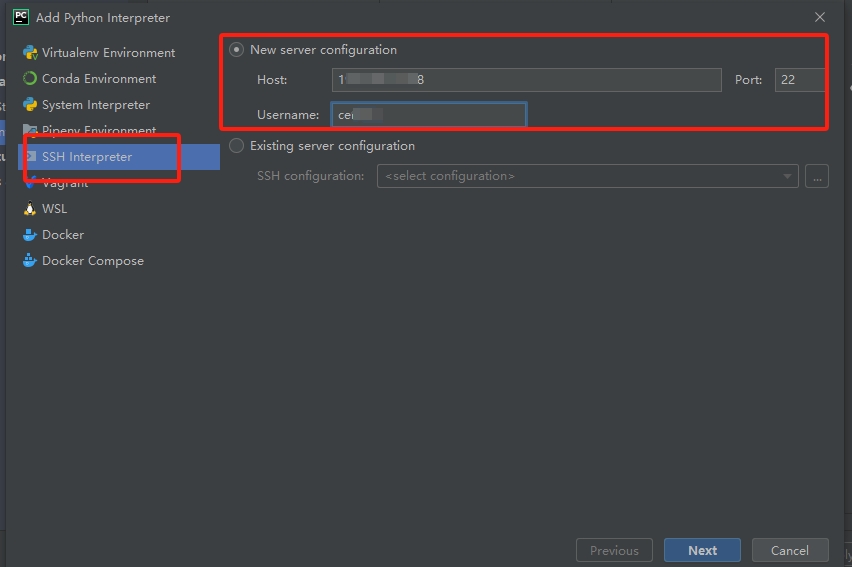

(3) Add the remote interpreter over SSH: enter the IP and username, then the password.

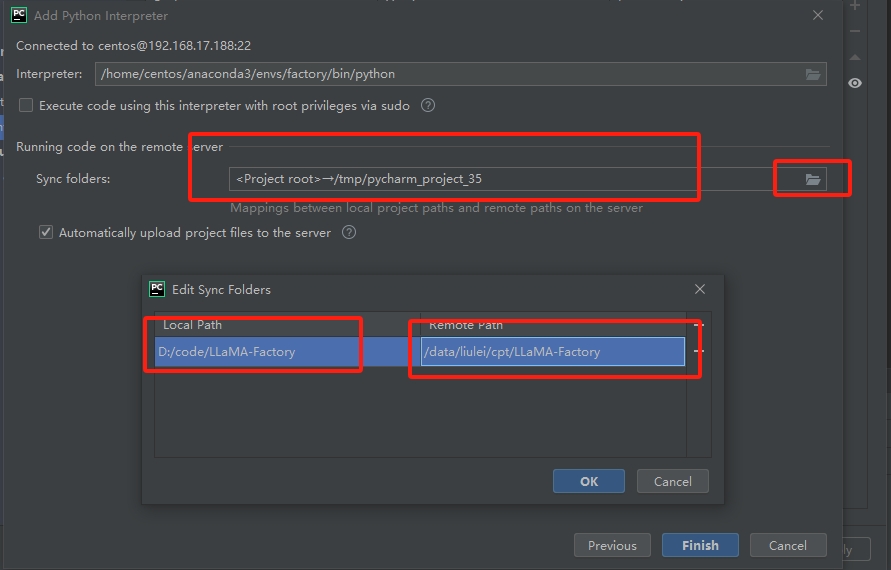

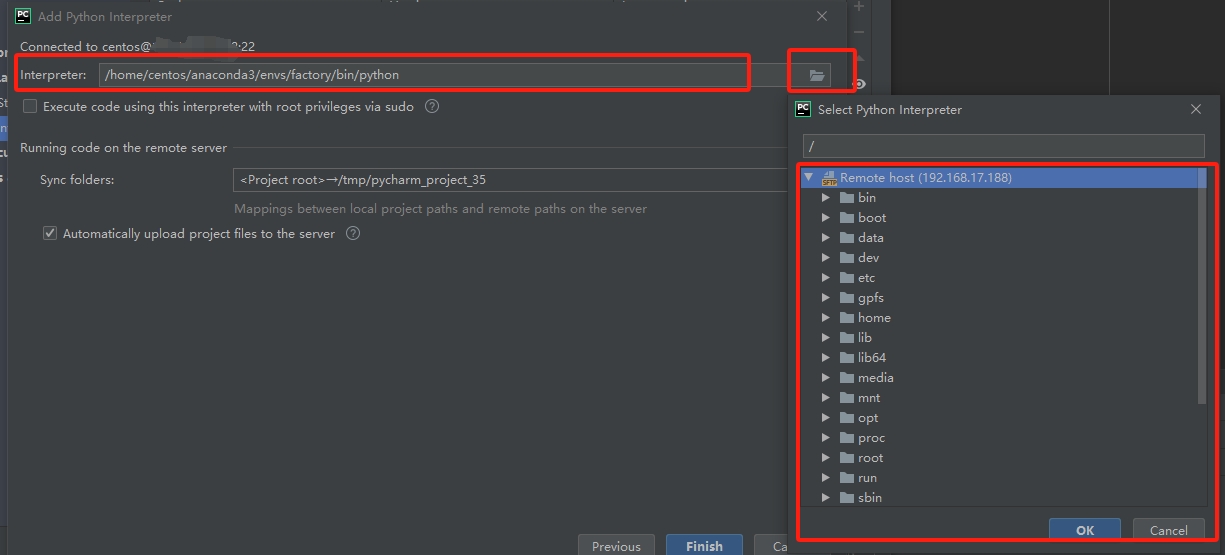

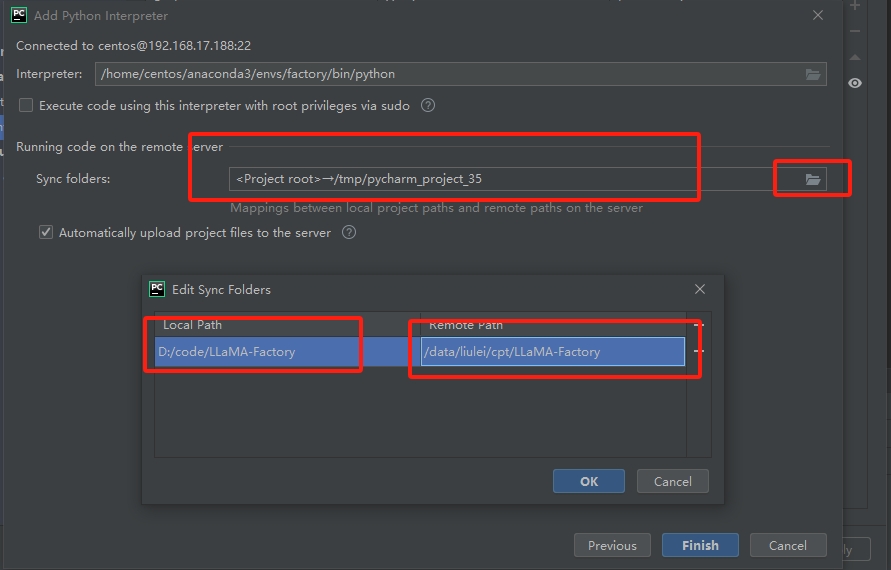

(4) Select the interpreter on the remote server, and map the remote code directory to the local code directory.

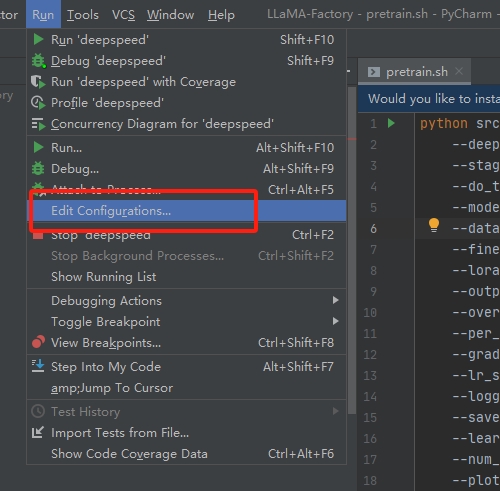

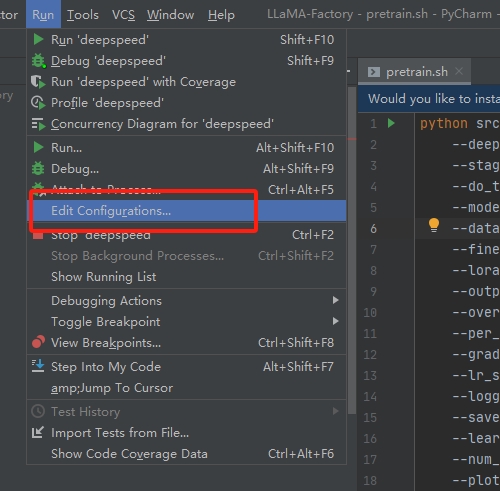
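To make the effect of the mapping concrete, the sketch below shows how a local Windows path translates to its remote counterpart once the two project roots are mapped. It only illustrates the idea and is not PyCharm's own code; `to_remote_path` is a hypothetical helper, and the two roots are the ones used in this walkthrough.

```python
from pathlib import PurePosixPath, PureWindowsPath


def to_remote_path(local_path, local_root, remote_root):
    """Translate a local Windows path to the mapped remote POSIX path."""
    # Path of the file relative to the local project root.
    rel = PureWindowsPath(local_path).relative_to(PureWindowsPath(local_root))
    # Re-attach the same relative parts under the remote root.
    return PurePosixPath(remote_root).joinpath(*rel.parts)


if __name__ == "__main__":
    print(
        to_remote_path(
            r"D:\code\LLaMA-Factory\deepspeed\launcher\runner.py",
            r"D:\code\LLaMA-Factory",
            "/data/liulei/cpt/LLaMA-Factory",
        )
    )
```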

(5) Configure the debug entry command.

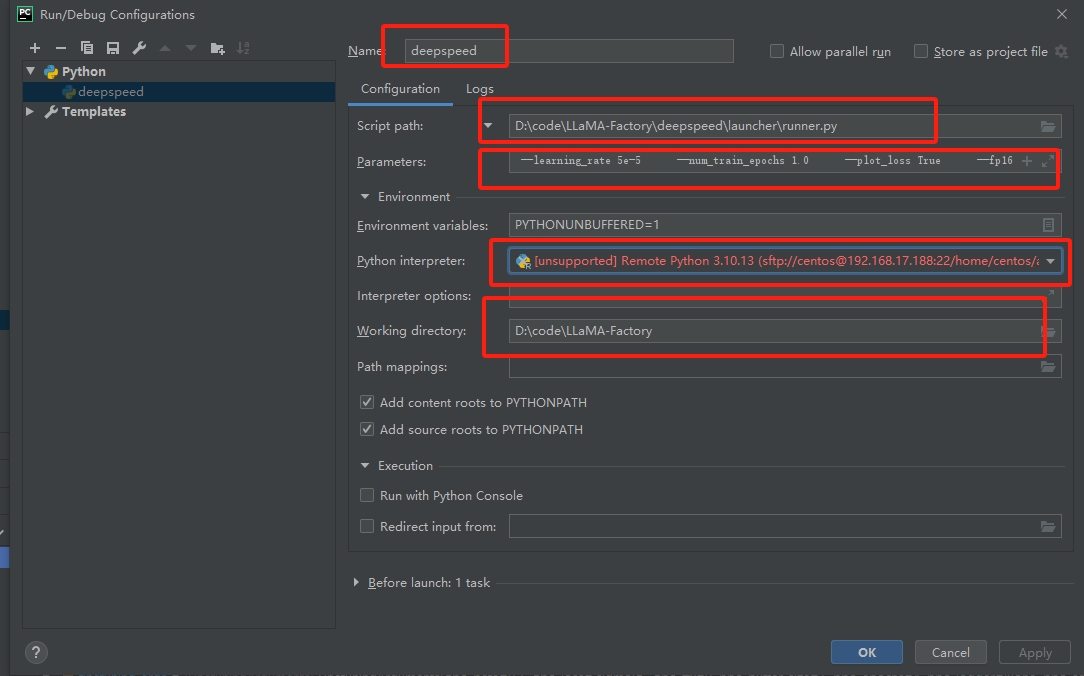

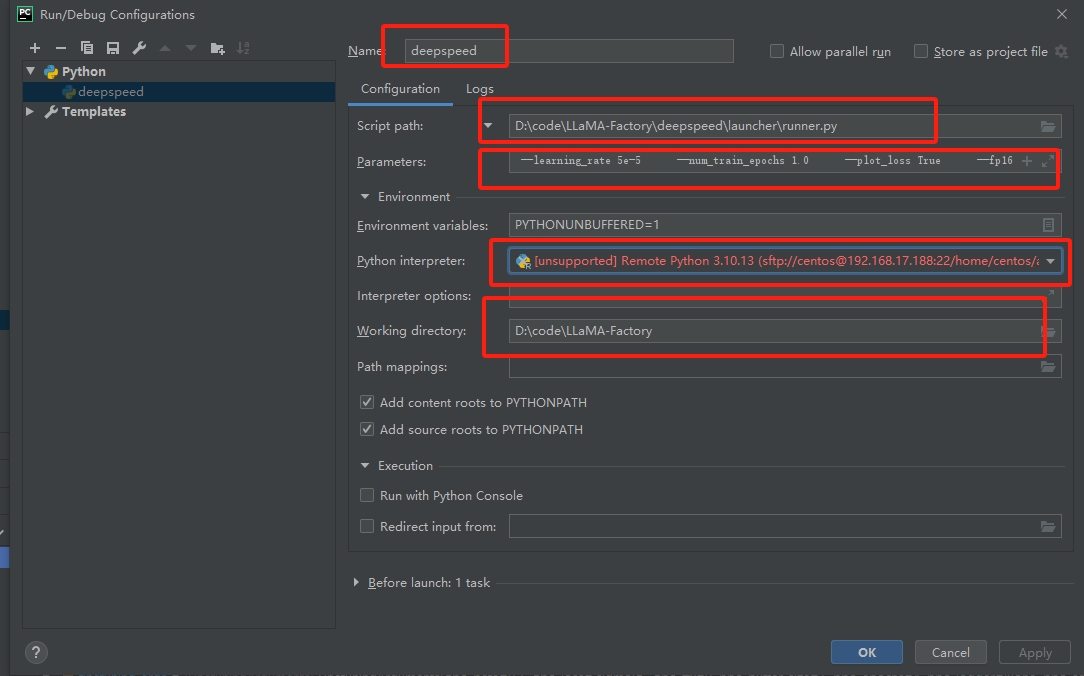

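(6) Configure the script, parameters, Python interpreter, and code path.

Entry script: D:\code\LLaMA-Factory\deepspeed\launcher\runner.py

Parameters: note that these come from the pretrain.sh launch script above, with the deepspeed command removed, the backslashes removed, and True written out explicitly for the flags that otherwise default to it.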

--master_port=9901 src/train_bash.py --deepspeed ./ds_config.json --stage pt --do_train True --model_name_or_path ../Yi-34B-Chat --dataset input_test --finetuning_type full --lora_target q_proj,v_proj --output_dir path_to_pt_checkp1 --overwrite_cache True --per_device_train_batch_size 1 --gradient_accumulation_steps 1 --lr_scheduler_type cosine --logging_steps 1 --save_steps 100 --learning_rate 5e-5 --num_train_epochs 1.0 --plot_loss True --fp16
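The conversion from launch script to Parameters string is mechanical, so it can be sketched in a few lines of Python. This is an illustration under assumptions: `to_pycharm_params` is a hypothetical helper, and which flags receive an explicit True is left to the caller (above, `--do_train`, `--overwrite_cache` and `--plot_loss` get one, while `--fp16` does not).

```python
import shlex


def to_pycharm_params(script_text, bool_flags=()):
    """Turn a `deepspeed ...` shell command into a one-line parameter string."""
    # Join backslash-continued lines before tokenizing.
    joined = script_text.replace("\\\n", " ")
    tokens = shlex.split(joined)
    if tokens and tokens[0] == "deepspeed":
        tokens = tokens[1:]  # the entry script replaces the command itself

    out = []
    for i, tok in enumerate(tokens):
        out.append(tok)
        if tok in bool_flags:
            nxt = tokens[i + 1] if i + 1 < len(tokens) else None
            # Add an explicit True only when no value follows the flag.
            if nxt is None or nxt.startswith("--"):
                out.append("True")
    return " ".join(out)


if __name__ == "__main__":
    script = (
        "deepspeed --master_port=9901 src/train_bash.py \\\n"
        "--deepspeed ./ds_config.json \\\n"
        "--do_train \\\n"
        "--plot_loss \\\n"
        "--bf16\n"
    )
    print(to_pycharm_params(script, {"--do_train", "--plot_loss"}))
```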

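Once this is done you can start debugging. In practice, though, the run still fails with an error, and a few code changes are needed.

(7) Modify the corresponding local code

Problem 1: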

ssh://centos@18:22/home/centos/anaconda3/envs/factory/bin/python -u /home/centos/.pycharm_helpers/pydev/pydevd.py --multiproc --qt-support=auto --client 0.0.0.0 --port 34567 --file /data/liulei/cpt/LLaMA-Factory/deepspeed/launcher/runner.py --master_port=9901 src/train_bash.py --deepspeed ./ds_config.json --stage pt --do_train True --model_name_or_path ../Yi-34B-Chat --dataset input_test --finetuning_type full --lora_target q_proj,v_proj --output_dir path_to_pt_checkp1 --overwrite_cache True --per_device_train_batch_size 1 --gradient_accumulation_steps 1 --lr_scheduler_type cosine --logging_steps 1 --save_steps 100 --learning_rate 5e-5 --num_train_epochs 1.0 --plot_loss True --fp16

/home/centos/.pycharm_helpers/pydev/pydevd.py:1806: DeprecationWarning: currentThread() is deprecated, use current_thread() instead
  dummy_thread = threading.currentThread()
pydev debugger: process 232478 is connecting

Connected to pydev debugger (build 201.6668.115)
Traceback (most recent call last):
  File "/home/centos/.pycharm_helpers/pydev/pydevd.py", line 1438, in _exec
    pydev_imports.execfile(file, globals, locals) # execute the script
  File "/home/centos/.pycharm_helpers/pydev/_pydev_imps/_pydev_execfile.py", line 18, in execfile
    exec(compile(contents+"\n", file, 'exec'), glob, loc)
  File "/data/liulei/cpt/LLaMA-Factory/deepspeed/launcher/runner.py", line 24, in <module>
    from .multinode_runner import PDSHRunner, OpenMPIRunner, MVAPICHRunner, SlurmRunner, MPICHRunner, IMPIRunner
ImportError: attempted relative import with no known parent package

Solution:

Edit the local file D:\code\LLaMA-Factory\deepspeed\launcher\runner.py, which maps to the remote file /data/liulei/cpt/LLaMA-Factory/deepspeed/launcher/runner.py, and change the relative imports to absolute ones.

Before:

from .multinode_runner import PDSHRunner, OpenMPIRunner, MVAPICHRunner, SlurmRunner, MPICHRunner, IMPIRunner
from .constants import PDSH_LAUNCHER, OPENMPI_LAUNCHER, MVAPICH_LAUNCHER, SLURM_LAUNCHER, MPICH_LAUNCHER, IMPI_LAUNCHER
from ..constants import TORCH_DISTRIBUTED_DEFAULT_PORT
from ..nebula.constants import NEBULA_EXPORT_ENVS
from ..utils import logger
from ..autotuning import Autotuner
from deepspeed.accelerator import get_accelerator

After (the last line above, from deepspeed.accelerator import get_accelerator, is already absolute and stays unchanged):

from deepspeed.launcher.multinode_runner import PDSHRunner, OpenMPIRunner, MVAPICHRunner, SlurmRunner, MPICHRunner, IMPIRunner
from deepspeed.launcher.constants import PDSH_LAUNCHER, OPENMPI_LAUNCHER, MVAPICH_LAUNCHER, SLURM_LAUNCHER, MPICH_LAUNCHER, IMPI_LAUNCHER
from deepspeed.constants import TORCH_DISTRIBUTED_DEFAULT_PORT
from deepspeed.nebula.constants import NEBULA_EXPORT_ENVS
from deepspeed.utils import logger
from deepspeed.autotuning import Autotuner

Then rerun the debugger, and the job runs happily from the local PyCharm.
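For background, the ImportError comes from how Python treats a file run by path: it is executed as a top-level script with no parent package, so relative imports cannot be resolved. The self-contained sketch below reproduces this with a throwaway package (`demo_pkg` and its files are made up for the demonstration and have nothing to do with deepspeed) and shows that running the same file as a module works.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def make_demo_package(root):
    """Create a tiny package whose runner.py uses a relative import."""
    pkg = Path(root) / "demo_pkg"
    pkg.mkdir()
    (pkg / "__init__.py").write_text("")
    (pkg / "helper.py").write_text("VALUE = 42\n")
    (pkg / "runner.py").write_text("from .helper import VALUE\nprint(VALUE)\n")
    return pkg


def run_python(args, cwd):
    """Run the current interpreter with args and capture its output."""
    return subprocess.run(
        [sys.executable, *args], cwd=str(cwd), capture_output=True, text=True
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        pkg = make_demo_package(tmp)
        # Run by file path: the relative import has no parent package.
        as_script = run_python([str(pkg / "runner.py")], cwd=tmp)
        print("script exit code:", as_script.returncode)
        # Run as a module: the package context is known, the import works.
        as_module = run_python(["-m", "demo_pkg.runner"], cwd=tmp)
        print("module output:", as_module.stdout.strip())
```

Rewriting the imports as absolute, as done above, sidesteps the problem for a script-path run configuration. PyCharm run configurations can also target a module name instead of a script path, which may avoid the edit, though that route was not tried in this walkthrough.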