Transformer Source Code Explained

For more machine learning, deep learning and NLP material, see my personal website: http://www.kexue.love


I. Background

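NLP holds a pivotal place in AI. If the NLP field were the martial-arts world (the jianghu), the deep learning school has already beaten the old school of statistical machine learning black and blue. Once deep learning ruled the jianghu, it gradually split into two factions built around CNNs and RNNs. In my view, the RNN faction gained the upper hand over CNNs thanks to the rapid rise of masters such as LSTM and then GRU. Then came June 2017. Just as the RNN faction believed it was about to unify the jianghu and claim the title of supreme master, an unrivaled inner technique capable of shaking the whole martial world, the Transformer, was quietly born, and a bloody storm began. On October 11, 2018, BERT, leader of the Transformer faction, won the title of supreme master with a crushing 11-for-11 record at Bright Summit, that is, state-of-the-art results on 11 NLP tasks. From then on, this technique, worthy of the legendary Nine Yang Divine Skill, spread across the jianghu. By June 2020 the leadership of the Transformer faction had changed hands many times, but the Transformer technique itself remains as strong as ever. Today let's take a peek at the Transformer's secret formula.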

Original paper (with a Chinese translation):

https://www.yiyibooks.cn/yiyibooks/Attention_Is_All_You_Need/index.html

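There are currently several implementations of the Transformer:

TensorFlow version: https://github.com/Kyubyong/transformer

PyTorch version:

tensor2tensor version: https://github.com/tensorflow/tensor2tensor

Here we study the TensorFlow version above, a compact reimplementation by Kyubyong Park. The reference code released with the original paper is tensor2tensor.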

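II. Directory Structure

(1) Folders

||---iwslt2016 training data and prediction data

||---log model results (checkpoints, summaries)

||---eval evaluation outputs (translations of the validation set)

||---test test outputs

||---fig figures: BLEU, loss, lr

(2) Data processing

||---download.sh script that downloads the training data

||---hparams.py hyperparameters

||---prepro.py preprocessing: builds the vocabulary etc. from the training data

||---data_load.py data loading and batching

||---utils.py utilities: saving/loading hyperparameters, model evaluation

(3) Model

||---train.py training entry point

||---test.py test entry point

||---model.py network architecture

||---modules.py implementations of the model's building blocks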

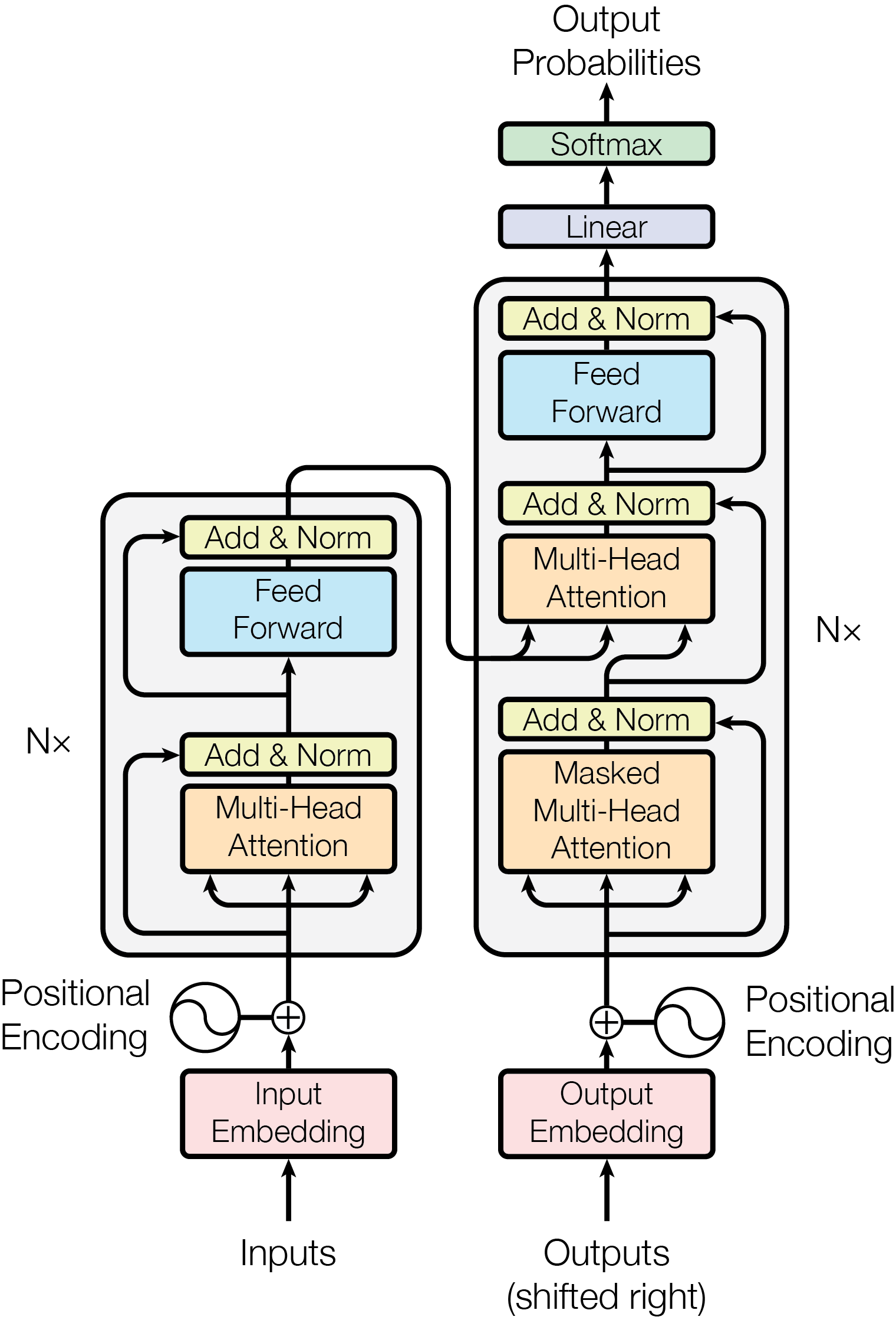

III. Architecture

IV. Code

(1).train.py

```python
# -*- coding: utf-8 -*-
#/usr/bin/python3
'''
Feb. 2019 by kyubyong park.
kbpark.linguist@gmail.com.
https://www.github.com/kyubyong/transformer
'''
import tensorflow as tf

from model import Transformer
from tqdm import tqdm
from data_load import get_batch
from utils import save_hparams, save_variable_specs, get_hypotheses, calc_bleu
import os
from hparams import Hparams
import math
import logging

logging.basicConfig(level=logging.INFO)

# Hyperparameters
logging.info("# hparams")
hparams = Hparams()
parser = hparams.parser
hp = parser.parse_args()
save_hparams(hp, hp.logdir)

logging.info("# Prepare train/eval batches")
# Data preprocessing
# Training set: a shuffled dataset of batches
train_batches, num_train_batches, num_train_samples = get_batch(hp.train1, hp.train2,
                                                                hp.maxlen1, hp.maxlen2,
                                                                hp.vocab, hp.batch_size,
                                                                shuffle=True)
# Validation set: huge maxlen so no eval sentence is dropped for length; not shuffled
eval_batches, num_eval_batches, num_eval_samples = get_batch(hp.eval1, hp.eval2,
                                                             100000, 100000,
                                                             hp.vocab, hp.batch_size,
                                                             shuffle=False)

# Create an iterator of the correct shape and type.
# One reinitializable iterator serves both datasets: running train_init_op or
# eval_init_op selects which dataset xs, ys are read from.
iter = tf.data.Iterator.from_structure(train_batches.output_types, train_batches.output_shapes)
xs, ys = iter.get_next()

train_init_op = iter.make_initializer(train_batches)
eval_init_op = iter.make_initializer(eval_batches)
```

```python
logging.info("# Load model")
# Instantiate the model
m = Transformer(hp)
# Build the training graph from x and y (labels)
loss, train_op, global_step, train_summaries = m.train(xs, ys)
# Build the evaluation (autoregressive inference) graph
y_hat, eval_summaries = m.eval(xs, ys)
# y_hat = m.infer(xs, ys)

logging.info("# Session")
saver = tf.train.Saver(max_to_keep=hp.num_epochs)
with tf.Session() as sess:
    # Restore the latest checkpoint from the log directory, if there is one
    ckpt = tf.train.latest_checkpoint(hp.logdir)
    if ckpt is None:
        # No checkpoint: initialize all variables from scratch
        logging.info("Initializing from scratch")
        sess.run(tf.global_variables_initializer())
        save_variable_specs(os.path.join(hp.logdir, "specs"))
    else:
        # Checkpoint found: resume from it
        saver.restore(sess, ckpt)

    # Writes summaries and the graph to hp.logdir (for TensorBoard)
    summary_writer = tf.summary.FileWriter(hp.logdir, sess.graph)

    sess.run(train_init_op)
    # Total number of steps; num_train_batches is the number of batches per epoch
    total_steps = hp.num_epochs * num_train_batches
    # Current global step (non-zero when resuming)
    _gs = sess.run(global_step)
    # Training loop; tqdm only draws a progress bar
    for i in tqdm(range(_gs, total_steps+1)):
        _, _gs, _summary = sess.run([train_op, global_step, train_summaries])
        epoch = math.ceil(_gs / num_train_batches)
        summary_writer.add_summary(_summary, _gs)

        # End of an epoch: evaluate, write translations, compute BLEU, save
        if _gs and _gs % num_train_batches == 0:
            logging.info("epoch {} is done".format(epoch))
            _loss = sess.run(loss)  # train loss (this run consumes one more batch)

            logging.info("# test evaluation")
            _, _eval_summaries = sess.run([eval_init_op, eval_summaries])
            summary_writer.add_summary(_eval_summaries, _gs)

            logging.info("# get hypotheses")
            hypotheses = get_hypotheses(num_eval_batches, num_eval_samples, sess, y_hat, m.idx2token)

            logging.info("# write results")
            model_output = "iwslt2016_E%02dL%.2f" % (epoch, _loss)
            if not os.path.exists(hp.evaldir): os.makedirs(hp.evaldir)
            translation = os.path.join(hp.evaldir, model_output)
            with open(translation, 'w') as fout:
                fout.write("\n".join(hypotheses))

            # Score the translations against the references
            logging.info("# calc bleu score and append it to translation")
            calc_bleu(hp.eval3, translation)

            # Save a checkpoint
            logging.info("# save models")
            ckpt_name = os.path.join(hp.logdir, model_output)
            saver.save(sess, ckpt_name, global_step=_gs)
            logging.info("after training of {} epochs, {} has been saved.".format(epoch, ckpt_name))

            logging.info("# fall back to train mode")
            sess.run(train_init_op)
    summary_writer.close()

logging.info("Done")
```
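The epoch bookkeeping in this loop is easy to misread. Below is a plain-Python simulation of it, without TensorFlow. `simulate_train_loop` is a hypothetical helper, and it assumes, as the code does, that each `sess.run(train_op)` increments `global_step` by exactly 1. The simulation shows which global steps trigger the evaluate/save branch. It also shows that `range(_gs, total_steps+1)` runs one step more than `total_steps`.

```python
import math

def simulate_train_loop(num_epochs, num_train_batches, start_gs=0):
    """Replay train.py's loop arithmetic; return the final global step and
    the (global_step, epoch) pairs at which evaluation/checkpointing runs."""
    total_steps = num_epochs * num_train_batches
    gs = start_gs                        # sess.run(global_step) before the loop
    end_of_epoch = []
    for _ in range(start_gs, total_steps + 1):
        gs += 1                          # one train_op run bumps global_step by 1
        epoch = math.ceil(gs / num_train_batches)
        if gs and gs % num_train_batches == 0:
            end_of_epoch.append((gs, epoch))
    return gs, end_of_epoch

print(simulate_train_loop(num_epochs=3, num_train_batches=4))
# (13, [(4, 1), (8, 2), (12, 3)])
print(simulate_train_loop(num_epochs=3, num_train_batches=4, start_gs=8))
# (13, [(12, 3)])
```

When training resumes from a checkpoint, `_gs` starts at the restored step. Epochs that were already completed therefore do not trigger evaluation again.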

(2).model.py

```python
# -*- coding: utf-8 -*-
# /usr/bin/python3
'''
Feb. 2019 by kyubyong park.
kbpark.linguist@gmail.com.
https://www.github.com/kyubyong/transformer

Transformer network
'''
import tensorflow as tf

from data_load import load_vocab
from modules import get_token_embeddings, ff, positional_encoding, multihead_attention, label_smoothing, noam_scheme
from utils import convert_idx_to_token_tensor
from tqdm import tqdm
import logging

logging.basicConfig(level=logging.INFO)

class Transformer:
    '''
    xs: tuple of
        x: int32 tensor. (N, T1)
        x_seqlens: int32 tensor. (N,)
        sents1: str tensor. (N,)
    ys: tuple of
        decoder_input: int32 tensor. (N, T2)
        y: int32 tensor. (N, T2)
        y_seqlen: int32 tensor. (N, )
        sents2: str tensor. (N,)
    training: boolean.
    '''
    def __init__(self, hp):
        self.hp = hp
        self.token2idx, self.idx2token = load_vocab(hp.vocab)
        # Token embedding matrix, (vocab_size, d_model). Source and target share one
        # vocabulary, so this single matrix is used for the encoder input, the decoder
        # input and the final output projection.
        self.embeddings = get_token_embeddings(self.hp.vocab_size, self.hp.d_model, zero_pad=True)
```

```python
    def encode(self, xs, training=True):
        '''
        Returns
        memory: encoder outputs. (N, T1, d_model)
        '''
        with tf.variable_scope("encoder", reuse=tf.AUTO_REUSE):
            x, seqlens, sents1 = xs

            # src_masks: padding mask of x, True where the token id is 0 (<pad>), e.g.
            # [[False False False False False True True True True True True True
            #   True True True True True True True True True True True]
            #  [False False False False False False False False False False True True
            #   True True True True True True True True True True True]]
            src_masks = tf.math.equal(x, 0)  # (N, T1)

            # embedding
            enc = tf.nn.embedding_lookup(self.embeddings, x)  # (N, T1, d_model)
            enc *= self.hp.d_model**0.5  # scale

            # Add positional information
            enc += positional_encoding(enc, self.hp.maxlen1)
            enc = tf.layers.dropout(enc, self.hp.dropout_rate, training=training)

            ## Blocks
            for i in range(self.hp.num_blocks):
                with tf.variable_scope("num_blocks_{}".format(i), reuse=tf.AUTO_REUSE):
                    # self-attention
                    enc = multihead_attention(queries=enc,
                                              keys=enc,
                                              values=enc,
                                              key_masks=src_masks,
                                              num_heads=self.hp.num_heads,
                                              dropout_rate=self.hp.dropout_rate,
                                              training=training,
                                              causality=False)
                    # feed forward
                    enc = ff(enc, num_units=[self.hp.d_ff, self.hp.d_model])
        memory = enc
        return memory, sents1, src_masks
```
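The first lines of `encode` can be reproduced in NumPy. The sketch below uses a toy vocabulary (V=10, d_model=4), and `encoder_input` is an illustrative name. The padding mask is just `x == 0`. Row 0 of the embedding matrix is zero, so padded positions embed to zero vectors even after the √d_model scaling.

```python
import numpy as np

def encoder_input(x, embeddings, d_model):
    """Mimic the start of encode(): padding mask, embedding lookup, scaling."""
    src_masks = (x == 0)                    # like tf.math.equal(x, 0): True at <pad>
    enc = embeddings[x] * d_model ** 0.5    # like tf.nn.embedding_lookup, then scale
    return enc, src_masks

rng = np.random.default_rng(0)
V, d_model = 10, 4
embeddings = rng.normal(size=(V, d_model)).astype(np.float32)
embeddings[0] = 0.0                         # zero_pad=True: the <pad> row is all zeros
x = np.array([[5, 7, 2, 0, 0],
              [3, 1, 4, 9, 0]])             # two sentences padded to T1 = 5
enc, src_masks = encoder_input(x, embeddings, d_model)
print(enc.shape)                # (2, 5, 4)
print(src_masks[0].tolist())    # [False, False, False, True, True]
```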

```python
    def decode(self, ys, memory, src_masks, training=True):
        '''
        memory: encoder outputs. (N, T1, d_model)
        src_masks: (N, T1)

        Returns
        logits: (N, T2, V). float32.
        y_hat: (N, T2). int32. Predicted ids, e.g. [[1,2133,2123,4444,...],[1,2342,43434,....]]
        y: (N, T2). int32. Label ids, e.g. [[1,2133,2123,4444,...],[1,2342,43434,....]]
        sents2: (N,). string.
        '''
        with tf.variable_scope("decoder", reuse=tf.AUTO_REUSE):
            # decoder_inputs: (N, T2), the target shifted right: <s> is prepended and the
            # last token dropped, so the prediction at position t only sees targets before t
            # decoder_inputs: [[ 2 40 496 305 25112 31943 0 0 0],[2 142 2823 77 2960 532 368 1230]]
            # y: the labels, (N, T2)
            # y: [[ 40 496 305 25112 31943 3 0 0],[ 142 2823 77 2960 532 368 1230 319 ...
            # seqlens: [ 6 24 14 41]
            # sents2: (N,)
            # sents2: [b'_I _am _my _connectome .', b"_And _though _that _company _has _ ...]
            decoder_inputs, y, seqlens, sents2 = ys

            # tgt_masks: (N, T2), True at <pad> positions
            # [[False False False False False False False False False False True True
            #   True True True True True True True True True True True True
            #   True True True True]
            #  [False False False False False False False False False True True True
            #   True True True True True True True True True True True True
            #   True True True True]]
            tgt_masks = tf.math.equal(decoder_inputs, 0)  # (N, T2)

            # embedding: map token ids to vectors
            # self.embeddings: token embedding matrix, (V, d_model)
            dec = tf.nn.embedding_lookup(self.embeddings, decoder_inputs)  # (N, T2, d_model)
            dec *= self.hp.d_model ** 0.5  # scale

            # Add the positional encoding; dec stays (N, T2, d_model)
            dec += positional_encoding(dec, self.hp.maxlen2)
            # dropout
            dec = tf.layers.dropout(dec, self.hp.dropout_rate, training=training)

            # Blocks
            for i in range(self.hp.num_blocks):
                with tf.variable_scope("num_blocks_{}".format(i), reuse=tf.AUTO_REUSE):
                    # Masked self-attention (Note that causality is True at this time)
                    dec = multihead_attention(queries=dec,
                                              keys=dec,
                                              values=dec,
                                              key_masks=tgt_masks,
                                              num_heads=self.hp.num_heads,
                                              dropout_rate=self.hp.dropout_rate,
                                              training=training,
                                              causality=True,
                                              scope="self_attention")

                    # Vanilla attention (encoder-decoder attention: queries come from the
                    # decoder, keys and values from the encoder output)
                    dec = multihead_attention(queries=dec,
                                              keys=memory,
                                              values=memory,
                                              key_masks=src_masks,
                                              num_heads=self.hp.num_heads,
                                              dropout_rate=self.hp.dropout_rate,
                                              training=training,
                                              causality=False,
                                              scope="vanilla_attention")
                    ### Feed Forward
                    dec = ff(dec, num_units=[self.hp.d_ff, self.hp.d_model])

        # Final linear projection (embedding weights are shared)
        # Transpose the embedding matrix to use it as the output weights
        weights = tf.transpose(self.embeddings)  # (d_model, vocab_size)
        # tf.einsum (Einstein summation): multiply every decoder output vector by the
        # weights, giving one unnormalized score (logit) per vocabulary entry;
        # a softmax over the last axis would turn these into probabilities
        logits = tf.einsum('ntd,dk->ntk', dec, weights)  # (N, T2, vocab_size)
        # tf.argmax: index of the largest value along an axis (the greedy prediction)
        # y_hat: (N, T2), e.g. [[ 5768 7128 7492 3546 7128 3947 21373 7128 7128 7128 7492 4501
        #                          7128 7128 14651], ...]
        y_hat = tf.to_int32(tf.argmax(logits, axis=-1))

        # See the docstring for the return values
        return logits, y_hat, y, sents2
```
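Two ideas from `decode` can be checked outside TensorFlow. The NumPy sketch below does both, and `make_decoder_pair` is a hypothetical helper.

First, it builds a (decoder_inputs, y) pair by shifting right. It assumes `<pad>`=0, `<s>`=2 and `</s>`=3, as the printed example above suggests. The real ids come from the vocabulary file built in preprocessing.

Second, it computes logits with the transposed embedding matrix. This is the same weight tying as `tf.einsum('ntd,dk->ntk', dec, weights)`.

```python
import numpy as np

PAD, BOS, EOS = 0, 2, 3  # assumed ids, inferred from the printed example

def make_decoder_pair(token_ids, maxlen):
    """Return (decoder_input, y) for one target sentence, padded to maxlen."""
    y = token_ids + [EOS]                 # labels end with </s>
    decoder_input = [BOS] + y[:-1]        # shift right: prepend <s>, drop the last token
    padding = [PAD] * (maxlen - len(y))
    return decoder_input + padding, y + padding

dec_in, y = make_decoder_pair([40, 496, 305, 25112, 31943], maxlen=8)
print(dec_in)  # [2, 40, 496, 305, 25112, 31943, 0, 0]
print(y)       # [40, 496, 305, 25112, 31943, 3, 0, 0]

# Tied output projection with toy sizes: logits = dec @ embeddings.T
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(6, 4))                   # (vocab_size, d_model)
dec = rng.normal(size=(2, 3, 4))                       # decoder output, (N, T2, d_model)
logits = np.einsum('ntd,dk->ntk', dec, embeddings.T)   # (N, T2, vocab_size)
y_hat = logits.argmax(axis=-1)                         # greedy ids, (N, T2)
```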

```python
    def train(self, xs, ys):
        '''
        Returns
        loss: scalar.
        train_op: training operation
        global_step: scalar.
        summaries: training summary node
        '''
        # forward
        memory, sents1, src_masks = self.encode(xs)
        logits, preds, y, sents2 = self.decode(ys, memory, src_masks)

        # train scheme
        # y: (N, T2) label ids, e.g. [[ 5768 7128 7492 7128 7492 4501 7128 7128 14651], ...]
        # y_: (N, T2, vocab_size), label-smoothed one-hot targets: 1 - ε + ε/V at the
        #     correct id and ε/V everywhere else (ε = 0.1 by default)
        y_ = label_smoothing(tf.one_hot(y, depth=self.hp.vocab_size))
        # Cross-entropy between the predictions and the smoothed labels
        # logits: unnormalized scores, (N, T2, vocab_size)
        # ce: (N, T2), one loss per token, e.g. shape (4, 42):
        #     array([[ 6.8254533, 6.601975 , 6.5515084, ..., 9.603574 , 10.001306 ], [6.8502007, 6.645137, ...]])
        ce = tf.nn.softmax_cross_entropy_with_logits_v2(logits=logits, labels=y_)
        # nonpadding: (N, T2), 1.0 for real tokens and 0.0 for <pad>, e.g. shape (4, 42):
        #     [[1., 1., 1., 0., 0., 0.], [1., 1., 1., 1., 1., 1., ...]]
        nonpadding = tf.to_float(tf.not_equal(y, self.token2idx["<pad>"]))  # 0: <pad>
        # tf.reduce_sum sums over the given axis (over all axes if none is given).
        # ce * nonpadding keeps only the loss of real tokens, and dividing by
        # tf.reduce_sum(nonpadding) averages over the number of real tokens.
        loss = tf.reduce_sum(ce * nonpadding) / (tf.reduce_sum(nonpadding) + 1e-7)

        global_step = tf.train.get_or_create_global_step()
        # Learning rate that changes with the training step (warmup, then decay)
        lr = noam_scheme(self.hp.lr, global_step, self.hp.warmup_steps)
        # Optimizer
        optimizer = tf.train.AdamOptimizer(lr)
        train_op = optimizer.minimize(loss, global_step=global_step)

        tf.summary.scalar('lr', lr)
        tf.summary.scalar("loss", loss)
        tf.summary.scalar("global_step", global_step)

        summaries = tf.summary.merge_all()

        return loss, train_op, global_step, summaries
```
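The loss above combines label smoothing, softmax cross-entropy and a padding mask. Below is a NumPy sketch of the same computation. `masked_smoothed_ce` is an illustrative name, and `pad_id=0` matches the vocabulary's `<pad>`.

Two properties are easy to check. With all-zero logits, every token's cross-entropy is log V whether or not smoothing is applied. Changing the logits at padded positions does not change the loss.

```python
import numpy as np

def masked_smoothed_ce(logits, y, pad_id=0, epsilon=0.1):
    """logits: (N, T, V) floats; y: (N, T) int labels. Returns a scalar loss."""
    V = logits.shape[-1]
    y_ = (1 - epsilon) * np.eye(V)[y] + epsilon / V            # label_smoothing(one_hot(y))
    z = logits - logits.max(axis=-1, keepdims=True)             # numerically stable log-softmax
    log_probs = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))
    ce = -(y_ * log_probs).sum(axis=-1)                         # (N, T) per-token loss
    nonpadding = (y != pad_id).astype(np.float64)               # 1.0 for real tokens
    return (ce * nonpadding).sum() / (nonpadding.sum() + 1e-7)

y = np.array([[4, 5, 0]])                  # the last position is <pad>
logits = np.zeros((1, 3, 6))
print(masked_smoothed_ce(logits, y))       # close to log(6) ≈ 1.7918
```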

```python
    def eval(self, xs, ys):
        '''Predicts autoregressively
        At inference, input ys is ignored.
        Returns
        y_hat: (N, T2)
        '''
        decoder_inputs, y, y_seqlen, sents2 = ys

        # Every sequence starts with <s> only: shape (N, 1)
        decoder_inputs = tf.ones((tf.shape(xs[0])[0], 1), tf.int32) * self.token2idx["<s>"]
        ys = (decoder_inputs, y, y_seqlen, sents2)

        memory, sents1, src_masks = self.encode(xs, False)

        logging.info("Inference graph is being built. Please be patient.")
        # The loop is unrolled into the graph: decode() is called maxlen2 times, and
        # each call re-decodes the whole prefix <s> + y_hat
        for _ in tqdm(range(self.hp.maxlen2)):
            logits, y_hat, y, sents2 = self.decode(ys, memory, src_masks, False)
            # Note: this compares a Tensor with a Python int while the graph is being
            # built; in TF1 graph mode that evaluates to False, so the loop never exits early
            if tf.reduce_sum(y_hat, 1) == self.token2idx["<pad>"]: break

            _decoder_inputs = tf.concat((decoder_inputs, y_hat), 1)
            ys = (_decoder_inputs, y, y_seqlen, sents2)

        # monitor a random sample
        n = tf.random_uniform((), 0, tf.shape(y_hat)[0]-1, tf.int32)
        sent1 = sents1[n]
        pred = convert_idx_to_token_tensor(y_hat[n], self.idx2token)
        sent2 = sents2[n]

        tf.summary.text("sent1", sent1)
        tf.summary.text("pred", pred)
        tf.summary.text("sent2", sent2)
        summaries = tf.summary.merge_all()

        return y_hat, summaries
```
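The greedy loop that `eval` unrolls into the graph can be sketched in plain Python. `step_fn` is a hypothetical stand-in for one `decode` call: given the decoder inputs, it returns one predicted id per position, like `y_hat`. As in the code, the next decoder input is `<s>` followed by all of `y_hat`. Unlike the code, the sketch stops once `</s>` (assumed id 3) is produced. That is a common choice, not what the original does.

```python
BOS, EOS = 2, 3  # assumed ids for <s> and </s>

def greedy_decode(step_fn, maxlen):
    decoder_inputs = [BOS]
    y_hat = []
    for _ in range(maxlen):
        y_hat = step_fn(decoder_inputs)      # one prediction per input position
        decoder_inputs = [BOS] + y_hat       # mirrors tf.concat((decoder_inputs, y_hat), 1)
        if y_hat[-1] == EOS:                 # stop once </s> is produced
            break
    return y_hat

# Toy causal "model": predicts the next id from the current id via a lookup table
NEXT = {BOS: 10, 10: 11, 11: 12, 12: EOS}

def toy_step(decoder_inputs):
    return [NEXT.get(tok, EOS) for tok in decoder_inputs]

print(greedy_decode(toy_step, maxlen=10))   # [10, 11, 12, 3]
```

The decoder is causal and dropout is off at inference. Re-decoding the prefix therefore reproduces the earlier predictions, and the output grows by one token per step.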

(3).modules.py building blocks

```python
# -*- coding: utf-8 -*-
#/usr/bin/python3
'''
Feb. 2019 by kyubyong park.
kbpark.linguist@gmail.com.
https://www.github.com/kyubyong/transformer.

Building blocks for Transformer
'''

import numpy as np
import tensorflow as tf
```

```python
# Layer normalization
def ln(inputs, epsilon = 1e-8, scope="ln"):
    '''Applies layer normalization. See https://arxiv.org/abs/1607.06450.
    inputs: A tensor with 2 or more dimensions, where the first dimension has `batch_size`.
    epsilon: A floating number. A very small number for preventing ZeroDivision Error.
    scope: Optional scope for `variable_scope`.

    Returns:
      A tensor with the same shape and data dtype as `inputs`.
    '''
    with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):
        # inputs: e.g. shape (2, 28, 512)
        # [[[-2.954033    1.695996   -3.1413105  ... -4.018251   -1.3023977  -0.30223972]
        #   [-2.3530521   1.7192748  -3.351814   ... -2.8273482  -0.65232337 -0.4264419 ]
        #   [-2.2563033   1.5593072  -3.2775855  ... -3.2531385  -0.9977432  -0.35023227]
        #   ...
        #   [-0.6843967  -1.003483    1.6845378  ... -0.31852347  2.5649729   0.804183  ]
        #   [-0.9206941  -1.1214577   2.0042856  ...  0.09208354  3.1693528   1.0978277 ]
        #   [-0.74338186 -0.9217373   1.9481943  ...  0.17723289  3.5957296   1.2593844 ]]
        #
        #  [[-2.517493    0.91683435 -3.4142447  ... -3.9844556  -1.1842881  -0.72232884]
        #   [-1.8562554   1.1425755  -3.2166462  ... -4.044012   -1.2189282  -0.38615695]
        #   [-2.0858154   1.4967486  -2.6159432  ... -3.5880928  -0.77991307 -0.33104044]
        #   ...]]
        inputs_shape = inputs.get_shape()  # (2, 28, 512)
        params_shape = inputs_shape[-1:]   # (512,): one beta/gamma per feature

        # mean / variance: statistics of each token vector over its last axis, shape (N, T, 1).
        # Example from another run with shape (2, 22, 1), shown flattened:
        # mean[0]:     [-0.06484328 -0.01978662 -0.05463864 -0.03227896 -0.05496355  0.00383393
        #               -0.00831935 -0.04848529 -0.05807229 -0.02759192 -0.04658262 -0.044351
        #               -0.0404046  -0.01897928 -0.03630898 -0.04527371  0.00215435 -0.02535543
        #               -0.02192712 -0.03002348 -0.01454192 -0.02426025]
        # mean[1]:     [-0.01858747 -0.09324092 ...]
        # variance[0]: [10.156523  6.320237  7.1246476 7.285763  5.4343634 7.8283257 6.818144
        #                7.768257  7.3524065 6.89916   6.85674   7.8054104 7.141796  6.5306134
        #                5.97371   6.9241476 6.4381695 7.5288787 7.336317  6.9332967 5.7453003
        #                6.994274 ]
        # variance[1]: [ 5.858997  6.8444324 4.2397 ...]
        mean, variance = tf.nn.moments(inputs, [-1], keep_dims=True)
        # beta: shape (512,), initialized to 0; the printed values are all close to 0
        # [ 6.42933836e-03 -7.10692781e-04  3.21562425e-03  3.74931638e-04
        #  -4.57212422e-03 -2.78607651e-04 -1.94830136e-05 -2.29233573e-03
        #  -3.16770235e-03 -6.85446896e-04  1.31265656e-03  3.29568808e-04
        #  -3.31255049e-03  2.30989186e-03 -4.35887882e-03 -1.96260633e-04
        #  -9.28307883e-03  5.05952770e-03 -4.32743737e-03  2.50508357e-02 ...]
        beta= tf.get_variable("beta", params_shape, initializer=tf.zeros_initializer())
        # gamma: shape (512,), initialized to 1; the printed values are all close to 1
        # [0.9971061  1.0393478  0.99570525 1.0020051  1.0036978  0.9996209
        #  1.0018373  1.0109453  1.012793   0.9991118  1.0150126  1.0089304
        #  1.0066382  1.0279335  1.0096924  1.0351689  0.9996498  1.0024785
        #  0.988923   1.0238222  1.0073771  0.99466056 1.0291134  1.0062896
        #  0.99483615 1.0175365  1.0021777  0.9909473  1.0064633  1.0024587 ...]
        gamma = tf.get_variable("gamma", params_shape, initializer=tf.ones_initializer())
        # normalized: (2, 28, 512), computed from the same inputs as above
        # [[[-1.5576326   0.8367065  -1.6540633  ... -2.1056075  -0.7071917  -0.19220194]
        #   [-1.1740364   0.8412268  -1.6682914  ... -1.4087503  -0.33240056 -0.22061913]
        #   [-1.2856865   0.8827096  -1.8660771  ... -1.8521839  -0.5704518  -0.20247398]
        #   ...
        #   [-0.36244592 -0.5411275   0.9641068  ... -0.15756473  1.4571316   0.47112694]
        #   [-0.4678502  -0.57143646  1.0413258  ...  0.05470374  1.6424552   0.57362866]
        #   [-0.3773605  -0.47287214  1.064013   ...  0.11564048  1.9462892   0.69514644]]
        normalized = (inputs - mean) / ( (variance + epsilon) ** (.5) )
        # Final output: learned per-feature scale (gamma) and shift (beta)
        outputs = gamma * normalized + beta

    return outputs
```
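A NumPy version of `ln` is handy for checking shapes and the effect of normalization; `layer_norm` is an illustrative name. Like `tf.nn.moments(inputs, [-1], keep_dims=True)`, it uses the population variance over the last axis. With gamma=1 and beta=0, every token vector therefore ends up with mean ≈ 0 and variance ≈ 1.

```python
import numpy as np

def layer_norm(inputs, gamma, beta, epsilon=1e-8):
    mean = inputs.mean(axis=-1, keepdims=True)       # (N, T, 1)
    variance = inputs.var(axis=-1, keepdims=True)    # population variance, (N, T, 1)
    normalized = (inputs - mean) / np.sqrt(variance + epsilon)
    return gamma * normalized + beta                 # gamma, beta: (E,), broadcast over N, T

x = np.random.default_rng(0).normal(loc=3.0, scale=2.0, size=(2, 5, 8))
out = layer_norm(x, gamma=np.ones(8), beta=np.zeros(8))
print(out.shape)   # (2, 5, 8)
```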

```python
# Build the token embedding matrix
def get_token_embeddings(vocab_size, num_units, zero_pad=True):
    '''Constructs token embedding matrix.
    Note that the row of index 0 is set to zeros.
    vocab_size: scalar. V.
    num_units: embedding dimensionality. E.
    zero_pad: Boolean. If True, all the values of the first row (id = 0) should be constant zero
    To apply query/key masks easily, zero pad is turned on.

    Returns
    weight variable: (V, E)
    '''
    with tf.variable_scope("shared_weight_matrix"):
        embeddings = tf.get_variable('weight_mat',
                                   dtype=tf.float32,
                                   shape=(vocab_size, num_units),
                                   initializer=tf.contrib.layers.xavier_initializer())
        if zero_pad:
            # Replace row 0 (the <pad> id) with a constant row of zeros
            embeddings = tf.concat((tf.zeros(shape=[1, num_units]),
                                    embeddings[1:, :]), 0)
    return embeddings
```
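Below is a NumPy sketch of `zero_pad`. It assumes Xavier/Glorot uniform initialization with limit √(6/(fan_in+fan_out)), the default of `tf.contrib.layers.xavier_initializer`.

In the TensorFlow code, the zero row is a new tensor built by `tf.concat`, not part of the variable. Row 0 of the variable therefore receives no gradient, and `<pad>` stays a zero vector throughout training. The masking in `positional_encoding` relies on exactly this.

```python
import numpy as np

def token_embeddings(vocab_size, num_units, zero_pad=True, seed=0):
    rng = np.random.default_rng(seed)
    limit = np.sqrt(6.0 / (vocab_size + num_units))          # Glorot/Xavier uniform bound
    emb = rng.uniform(-limit, limit, size=(vocab_size, num_units)).astype(np.float32)
    if zero_pad:
        # same as tf.concat((tf.zeros([1, num_units]), embeddings[1:, :]), 0)
        emb = np.concatenate([np.zeros((1, num_units), np.float32), emb[1:]], axis=0)
    return emb

emb = token_embeddings(vocab_size=10, num_units=4)
print(emb[0])   # [0. 0. 0. 0.]
```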

```python
def scaled_dot_product_attention(Q, K, V, key_masks,
                                 causality=False, dropout_rate=0.,
                                 training=True,
                                 scope="scaled_dot_product_attention"):
    '''See 3.2.1.
    Q: Packed queries. 3d tensor. (h*N, T_q, d_model/h).
    K: Packed keys. 3d tensor. (h*N, T_k, d_model/h).
    V: Packed values. 3d tensor. (h*N, T_k, d_model/h).
    key_masks: A 2d tensor with shape of [N, key_seqlen]
    causality: If True, applies masking for future blinding
    dropout_rate: A floating point number of [0, 1].
    training: boolean for controlling dropout
    scope: Optional scope for `variable_scope`.
    '''
    with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):
        d_k = Q.get_shape().as_list()[-1]

        # dot product: every query with every key
        outputs = tf.matmul(Q, tf.transpose(K, [0, 2, 1]))  # (h*N, T_q, T_k)

        # scale
        outputs /= d_k ** 0.5

        # key masking
        # key_masks: (N, T_k), True at padded key positions, e.g. in the decoder:
        # [[False False False False False False False False False False True True
        #   True True True True True True True True True True True True
        #   True True True True]
        #  [False False False False False False False False False True True True
        #   True True True True True True True True True True True True
        #   True True True True]]
        outputs = mask(outputs, key_masks=key_masks, type="key")

        # causality or future blinding masking
        if causality:
            outputs = mask(outputs, type="future")

        # softmax over the keys
        outputs = tf.nn.softmax(outputs)
        attention = tf.transpose(outputs, [0, 2, 1])
        tf.summary.image("attention", tf.expand_dims(attention[:1], -1))

        # # query masking
        # outputs = mask(outputs, Q, K, type="query")

        # dropout
        outputs = tf.layers.dropout(outputs, rate=dropout_rate, training=training)

        # weighted sum (context vectors)
        # tf.matmul: matrix multiplication
        outputs = tf.matmul(outputs, V)  # (h*N, T_q, d_v)

    return outputs
```
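Below is a NumPy sketch of the same computation, without masking or dropout; `softmax` and `attention` are illustrative names. Each row of the weight matrix sums to 1. A quick sanity check: if all keys are identical, the weights are uniform and every output row is the mean of the values.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)     # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q, K, V):
    """Q: (B, T_q, d_k), K: (B, T_k, d_k), V: (B, T_k, d_v); B plays the role of h*N."""
    d_k = Q.shape[-1]
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)   # (B, T_q, T_k)
    weights = softmax(scores)                          # softmax over the keys
    return weights @ V, weights                        # (B, T_q, d_v), (B, T_q, T_k)

rng = np.random.default_rng(0)
Q, K, V = rng.normal(size=(2, 3, 4)), rng.normal(size=(2, 5, 4)), rng.normal(size=(2, 5, 6))
out, w = attention(Q, K, V)
print(out.shape, w.shape)   # (2, 3, 6) (2, 3, 5)
```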

```python
def mask(inputs, key_masks=None, type=None):
    """Masks paddings on keys or queries to inputs
    inputs: 3d tensor. (h*N, T_q, T_k)
    key_masks: 2d tensor. (N, T_k)
    type: string. "key" | "future"

    e.g.,
    >> inputs = tf.zeros([4, 2, 3], dtype=tf.float32)
    >> key_masks = tf.constant([[0., 0., 1.],
                                [0., 1., 1.]])
    >> mask(inputs, key_masks=key_masks, type="key")
    array([[[ 0.0000000e+00,  0.0000000e+00, -4.2949673e+09],
            [ 0.0000000e+00,  0.0000000e+00, -4.2949673e+09]],

           [[ 0.0000000e+00, -4.2949673e+09, -4.2949673e+09],
            [ 0.0000000e+00, -4.2949673e+09, -4.2949673e+09]],

           [[ 0.0000000e+00,  0.0000000e+00, -4.2949673e+09],
            [ 0.0000000e+00,  0.0000000e+00, -4.2949673e+09]],

           [[ 0.0000000e+00, -4.2949673e+09, -4.2949673e+09],
            [ 0.0000000e+00, -4.2949673e+09, -4.2949673e+09]]], dtype=float32)
    """
    padding_num = -2 ** 32 + 1
    if type in ("k", "key", "keys"):
        key_masks = tf.to_float(key_masks)
        # tf.tile repeats a tensor: the output's i-th dimension has
        # input.dims(i) * multiples[i] elements.
        # The inputs were split into heads, so the masks must be repeated h times too.
        # With batch=2 (h=8): key_masks (16, 34)
        key_masks = tf.tile(key_masks, [tf.shape(inputs)[0] // tf.shape(key_masks)[0], 1])  # (h*N, seqlen)
        # tf.expand_dims inserts an axis so the mask broadcasts over the T_q queries.
        # With batch=2: key_masks (16, 1, 34), e.g.
        # [[[0. 0. 0. 0. 0. 0. 0. 0. 0. 0. ... 0.]]   34 zeros: no padding in this sentence
        #  [[0. 0. 0. 0. 0. 0. 0. 0. 0. 1. ... 1.]]   9 zeros, then 25 ones (padding)
        #  ...]
        key_masks = tf.expand_dims(key_masks, 1)  # (h*N, 1, seqlen)
        # Padded keys get a huge negative score, so softmax gives them ~0 weight
        outputs = inputs + key_masks * padding_num

    # elif type in ("q", "query", "queries"):
    #     # Generate masks
    #     masks = tf.sign(tf.reduce_sum(tf.abs(queries), axis=-1))  # (N, T_q)
    #     masks = tf.expand_dims(masks, -1)  # (N, T_q, 1)
    #     masks = tf.tile(masks, [1, 1, tf.shape(keys)[1]])  # (N, T_q, T_k)
    #
    #     # Apply masks to inputs
    #     outputs = inputs*masks
    elif type in ("f", "future", "right"):
        # tf.ones_like: a tensor of ones with the same shape as its input
        # diag_vals: (T_q, T_k), e.g. shape (20, 20), every entry 1.
        diag_vals = tf.ones_like(inputs[0, :, :])  # (T_q, T_k)
        # tril: the lower-triangular part, e.g. shape (20, 20)
        # [[1. 0. 0. ... 0. 0. 0.]
        #  [1. 1. 0. ... 0. 0. 0.]
        #  [1. 1. 1. ... 0. 0. 0.]
        #  ...
        #  [1. 1. 1. ... 1. 0. 0.]
        #  [1. 1. 1. ... 1. 1. 0.]
        #  [1. 1. 1. ... 1. 1. 1.]]
        tril = tf.linalg.LinearOperatorLowerTriangular(diag_vals).to_dense()  # (T_q, T_k)
        # With batch=2 and h=8: future_masks (16, 20, 20), where 20 is the sentence length;
        # the same lower triangle is repeated for every sentence and head
        # [[[1. 0. 0. ... 0. 0. 0.]
        #   [1. 1. 0. ... 0. 0. 0.]
        #   ...
        #   [1. 1. 1. ... 1. 1. 1.]]
        #  ...]
        future_masks = tf.tile(tf.expand_dims(tril, 0), [tf.shape(inputs)[0], 1, 1])  # (h*N, T_q, T_k)
        # paddings: same shape as future_masks, every entry padding_num (-4.2949673e+09)
        paddings = tf.ones_like(future_masks) * padding_num
        # tf.where(condition, paddings, inputs): where condition is True (future positions,
        # future_masks == 0) take padding_num, elsewhere keep inputs, e.g.
        # [[[ 4.43939781e+01 -4.29496730e+09 -4.29496730e+09 ... -4.29496730e+09 -4.29496730e+09 -4.29496730e+09]
        #   [ 3.41685486e+01 -1.06252086e+00 -4.29496730e+09 ... -4.29496730e+09 -4.29496730e+09 -4.29496730e+09]
        #   [ 3.96921425e+01  2.78434825e+00  4.21420937e+01 ... -4.29496730e+09 -4.29496730e+09 -4.29496730e+09]
        #   ...
        #   [ 5.53764381e+01  2.47523355e+00  5.91777534e+01 ... -1.21471321e+02 -4.29496730e+09 -4.29496730e+09]
        #   [ 5.32606239e+01  4.99608231e+00  5.68281059e+01 ... -1.08563034e+02 -4.29496730e+09 -4.29496730e+09]
        #   [ 4.16031532e+01  1.54854858e+00  4.41170464e+01 ... -8.69959717e+01 -4.29496730e+09 -4.29496730e+09]]
        #
        #  [[-1.77314243e+01 -4.29496730e+09 -4.29496730e+09 ... -4.29496730e+09 -4.29496730e+09 -4.29496730e+09]
        #   [-1.86583366e+01 -1.04054117e+01 -4.29496730e+09 ... -4.29496730e+09 -4.29496730e+09 -4.29496730e+09]
        #   [-2.13199158e+01 -1.20762053e+01 -6.59975433e+01 ... -4.29496730e+09 -4.29496730e+09 -4.29496730e+09]
        #   ...
        #   [-2.05263672e+01 -1.20537119e+01 -6.91874313e+01 ... -8.82377319e+01 -4.29496730e+09 -4.29496730e+09]
        #   [-1.61425571e+01 -8.80572414e+00 -5.09573174e+01 ... -6.37179871e+01  1.55839844e+01 -4.29496730e+09]
        #   [-2.01059513e+01 -1.21201067e+01 -6.66899109e+01 ... -8.49668579e+01  2.01012688e+01 -1.04354706e+02]]]
        outputs = tf.where(tf.equal(future_masks, 0), paddings, inputs)
    else:
        print("Check if you entered type correctly!")

    return outputs
```
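Both mask types can be sketched in NumPy; `key_mask` and `future_mask` are illustrative names, and the mask here is boolean with True meaning padding. The key-mask call reproduces the docstring example. There are 4 = h*N rows of inputs with N = 2, so the 2 masks are tiled twice. After the softmax, masked positions get (numerically) zero weight.

```python
import numpy as np

PADDING_NUM = -2 ** 32 + 1   # same constant as padding_num in mask()

def key_mask(inputs, key_masks):
    """inputs: (h*N, T_q, T_k) scores; key_masks: (N, T_k) bool, True = <pad>."""
    reps = inputs.shape[0] // key_masks.shape[0]                        # h
    km = np.tile(key_masks.astype(np.float64), (reps, 1))[:, None, :]   # (h*N, 1, T_k)
    return inputs + km * PADDING_NUM

def future_mask(inputs):
    """Hide keys j > i from query i by writing PADDING_NUM above the diagonal."""
    T_q, T_k = inputs.shape[1:]
    tril = np.tril(np.ones((T_q, T_k)))                                  # lower triangle of ones
    return np.where(tril[None] == 0, PADDING_NUM, inputs)

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

masked = key_mask(np.zeros((4, 2, 3)), np.array([[0, 0, 1], [0, 1, 1]], dtype=bool))
print(masked[1, 0])
# rows of the causal weights: [1, 0, 0], [0.5, 0.5, 0], [1/3, 1/3, 1/3]
print(softmax(future_mask(np.zeros((1, 3, 3))))[0])
```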

```python
# Multi-head attention
def multihead_attention(queries, keys, values, key_masks,
                        num_heads=8,
                        dropout_rate=0,
                        training=True,
                        causality=False,
                        scope="multihead_attention"):
    '''Applies multihead attention. See 3.2.2
    queries: A 3d tensor with shape of [N, T_q, d_model].
    keys: A 3d tensor with shape of [N, T_k, d_model].
    values: A 3d tensor with shape of [N, T_k, d_model].
    key_masks: A 2d tensor with shape of [N, key_seqlen]
    num_heads: An int. Number of heads.
    dropout_rate: A floating point number.
    training: Boolean. Controller of mechanism for dropout.
    causality: Boolean. If true, units that reference the future are masked.
    scope: Optional scope for `variable_scope`.

    Returns
      A 3d tensor with shape of (N, T_q, C)
    '''
    d_model = queries.get_shape().as_list()[-1]
    with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):
        # Linear projections
        # First pass queries, keys and values through their own dense layer
        Q = tf.layers.dense(queries, d_model, use_bias=True)  # (N, T_q, d_model)
        K = tf.layers.dense(keys, d_model, use_bias=True)  # (N, T_k, d_model)
        V = tf.layers.dense(values, d_model, use_bias=True)  # (N, T_k, d_model)

        # Split and concat
        # Split the last axis into num_heads pieces and stack them along the batch
        # axis: this is where the "multi-head" part happens
        Q_ = tf.concat(tf.split(Q, num_heads, axis=2), axis=0)  # (h*N, T_q, d_model/h)
        K_ = tf.concat(tf.split(K, num_heads, axis=2), axis=0)  # (h*N, T_k, d_model/h)
        V_ = tf.concat(tf.split(V, num_heads, axis=2), axis=0)  # (h*N, T_k, d_model/h)

        # The key step: attention, computed for all heads at once.
        # key_masks is the src_masks or tgt_masks passed in by the caller.
        outputs = scaled_dot_product_attention(Q_, K_, V_, key_masks, causality, dropout_rate, training)

        # Restore shape
        # Move the heads back from the batch axis to the feature axis.
        # (Unlike the paper, no output projection W^O is applied after this concat.)
        outputs = tf.concat(tf.split(outputs, num_heads, axis=0), axis=2 )  # (N, T_q, d_model)

        # Residual connection
        outputs += queries

        # Normalize
        outputs = ln(outputs)

    return outputs
```
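The split/concat trick is the least obvious line in `multihead_attention`. Below is a NumPy sketch; `split_heads` and `merge_heads` are illustrative names. It shows that head i of sentence n lands at batch index i*N + n. That is exactly the layout `tf.tile(key_masks, [h, 1])` produces in `mask`. The merge undoes the split.

```python
import numpy as np

def split_heads(x, h):
    """(N, T, d_model) -> (h*N, T, d_model/h), like tf.concat(tf.split(x, h, axis=2), axis=0)."""
    return np.concatenate(np.split(x, h, axis=2), axis=0)

def merge_heads(x, h):
    """(h*N, T, d_model/h) -> (N, T, d_model), like tf.concat(tf.split(x, h, axis=0), axis=2)."""
    return np.concatenate(np.split(x, h, axis=0), axis=2)

N, T, d_model, h = 2, 3, 8, 4
x = np.arange(N * T * d_model, dtype=np.float32).reshape(N, T, d_model)
xs = split_heads(x, h)
print(xs.shape)                          # (8, 3, 2)

key_masks = np.array([[0, 0, 1], [0, 1, 1]])
tiled = np.tile(key_masks, (h, 1))       # row i*N + n is the mask of sentence n
```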

```python
def ff(inputs, num_units, scope="positionwise_feedforward"):
    '''position-wise feed forward net. See 3.3

    inputs: A 3d tensor with shape of [N, T, C].
    num_units: A list of two integers.
    scope: Optional scope for `variable_scope`.

    Returns:
      A 3d tensor with the same shape and dtype as inputs
    '''
    with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):
        # Inner layer: d_model -> d_ff (num_units[0]) with ReLU
        # outputs: e.g. (2, 21, 2048); many entries are exactly 0 because of the ReLU
        # [[[0. 0.         0. ... 6.132974  0. 0.]
        #   [0. 0.         0. ... 7.307194  0. 0.]
        #   [0. 0.         0. ... 7.982828  0. 0.]
        #   ...
        #   [0. 0.         0. ... 7.964889  0. 0.]
        #   [0. 0.         0. ... 5.4325767 0. 0.]
        #   [0. 0.         0. ... 7.275622  0. 0.]]
        #
        #  [[0. 0.         0. ... 7.5862374 0. 0.]
        #   [0. 0.         0. ... 5.2648916 0. 0.]
        #   [0. 0.23242699 0. ... 6.187096  0. 0.]
        #   ...
        #   [0. 0.         0. ... 8.233661  0. 0.]
        #   [0. 1.2058965  0. ... 7.485455  0. 0.]
        #   [0. 0.         0. ... 8.30237   0. 0.]]]
        outputs = tf.layers.dense(inputs, num_units[0], activation=tf.nn.relu)

        # Outer layer: d_ff -> d_model (num_units[1]), no activation
        # outputs: e.g. (2, 21, 512)
        # [[[-25.37711    1.3692019 -50.80832  ...   0.9118566  -24.534058 -29.246645]
        #   [-28.563698   4.2556305 -48.531235 ...  -0.37409064 -25.03862  -28.988926]
        #   [-35.380463   9.315982  -49.559715 ...  -2.7038898  -30.601368 -33.075985]
        #   ...
        #   [-35.643818   9.848609  -48.638973 ...  -3.1406584  -30.439838 -32.913677]
        #   [-21.56209   -0.767092  -48.07494  ...   3.6515896  -22.752474 -24.482904]
        #   [-33.58284    8.678018  -46.875854 ...  -2.9922912  -28.830814 -31.506397]]
        #
        #  [[-33.383442   9.613742  -46.971367 ...  -3.2966337  -28.788752 -32.695858]
        #   [-19.326656  -3.1725218 -50.551548 ...   3.6544466  -20.554945 -25.621178]
        #   [-30.288332  17.503975  -24.142347 ...  -9.287842   -23.152536 -29.06433 ]
        #   ...
        #   [-35.35156    9.312039  -49.94821  ...  -2.5631473  -30.52241  -33.088287]
        #   [-37.59673   21.043293  -29.895994 ... -10.287443   -30.355291 -35.290684]
        #   [-35.986378   9.922715  -50.2701   ...  -3.6265292  -30.689642 -34.769424]]]
        outputs = tf.layers.dense(outputs, num_units[1])

        # Residual connection
        outputs += inputs

        # Normalize
        outputs = ln(outputs)

    return outputs
```
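"Position-wise" means the same two dense layers are applied to every position independently. The NumPy sketch below checks this with random toy weights; W1, b1, W2 and b2 are illustrative names, and `ln` is left out. Running the network on a single position gives the same row as running it on the whole sequence.

```python
import numpy as np

def positionwise_ff(x, W1, b1, W2, b2):
    hidden = np.maximum(0.0, x @ W1 + b1)   # inner layer, d_model -> d_ff, ReLU
    return hidden @ W2 + b2 + x             # outer layer, d_ff -> d_model, plus residual

rng = np.random.default_rng(0)
d_model, d_ff = 4, 16
W1, b1 = rng.normal(size=(d_model, d_ff)), rng.normal(size=d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), rng.normal(size=d_model)
x = rng.normal(size=(2, 5, d_model))        # (N, T, d_model)
y = positionwise_ff(x, W1, b1, W2, b2)
print(y.shape)                              # (2, 5, 4)
```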

```python
def label_smoothing(inputs, epsilon=0.1):
    '''Applies label smoothing. See 5.4 and https://arxiv.org/abs/1512.00567.
    inputs: 3d tensor. [N, T, V], where V is the number of vocabulary.
    epsilon: Smoothing rate.

    For example,

        import tensorflow as tf
        inputs = tf.convert_to_tensor([[[0, 0, 1],
                                        [0, 1, 0],
                                        [1, 0, 0]],

                                       [[1, 0, 0],
                                        [1, 0, 0],
                                        [0, 1, 0]]], tf.float32)

        outputs = label_smoothing(inputs)

        with tf.Session() as sess:
            print(sess.run([outputs]))

        >>
        [array([[[ 0.03333334,  0.03333334,  0.93333334],
                 [ 0.03333334,  0.93333334,  0.03333334],
                 [ 0.93333334,  0.03333334,  0.03333334]],

                [[ 0.93333334,  0.03333334,  0.03333334],
                 [ 0.93333334,  0.03333334,  0.03333334],
                 [ 0.03333334,  0.93333334,  0.03333334]]], dtype=float32)]
    '''
    V = inputs.get_shape().as_list()[-1]  # number of channels
    return ((1-epsilon) * inputs) + (epsilon / V)
```

```python
# Positional encoding
def positional_encoding(inputs,
                        maxlen,
                        masking=True,
                        scope="positional_encoding"):
    '''Sinusoidal Positional_Encoding. See 3.5
    inputs: 3d tensor. (N, T, E)
    maxlen: scalar. Must be >= T
    masking: Boolean. If True, padding positions are set to zeros.
    scope: Optional scope for `variable_scope`.

    returns
    3d tensor that has the same shape as inputs.
    '''

    E = inputs.get_shape().as_list()[-1]  # static
    N, T = tf.shape(inputs)[0], tf.shape(inputs)[1]  # dynamic
    with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):
        # position indices
        position_ind = tf.tile(tf.expand_dims(tf.range(T), 0), [N, 1])  # (N, T)

        # First part of the PE function: sin and cos argument
        position_enc = np.array([
            [pos / np.power(10000, (i-i%2)/E) for i in range(E)]
            for pos in range(maxlen)])

        # Second part: apply sin to even columns and cos to odd columns.
        position_enc[:, 0::2] = np.sin(position_enc[:, 0::2])  # dim 2i
        position_enc[:, 1::2] = np.cos(position_enc[:, 1::2])  # dim 2i+1
        position_enc = tf.convert_to_tensor(position_enc, tf.float32)  # (maxlen, E)

        # lookup
        outputs = tf.nn.embedding_lookup(position_enc, position_ind)

        # masks: wherever the input is 0 (e.g. the all-zero <pad> embedding), output 0
        if masking:
            outputs = tf.where(tf.equal(inputs, 0), inputs, outputs)

        return tf.to_float(outputs)
```
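Below is a NumPy sketch of the table built above, plus the masking step; `sinusoid_table` and `add_positions` are illustrative names. It follows PE(pos, 2i) = sin(pos / 10000^(2i/E)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/E)). The `(i - i%2)` term gives each sin/cos pair the same frequency. Row 0 is therefore [0, 1, 0, 1, ...]. Positions whose embedding is all zero (`<pad>`) receive no positional signal.

```python
import numpy as np

def sinusoid_table(maxlen, E):
    pos = np.arange(maxlen)[:, None]                    # (maxlen, 1)
    i = np.arange(E)[None, :]                           # (1, E)
    table = pos / np.power(10000, (i - i % 2) / E)      # same argument for dims 2k and 2k+1
    table[:, 0::2] = np.sin(table[:, 0::2])             # even dims: sin
    table[:, 1::2] = np.cos(table[:, 1::2])             # odd dims: cos
    return table

def add_positions(inputs, table):
    """inputs: (N, T, E). Mirrors `enc += positional_encoding(enc, maxlen)` with masking=True."""
    pe = np.broadcast_to(table[: inputs.shape[1]], inputs.shape)
    return inputs + np.where(inputs == 0, inputs, pe)   # zero entries get no PE

table = sinusoid_table(maxlen=10, E=6)
print(table[0])   # [0. 1. 0. 1. 0. 1.]
```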

```python
# Learning-rate schedule: linear warmup, then decay
def noam_scheme(init_lr, global_step, warmup_steps=4000.):
    '''Noam scheme learning rate decay
    init_lr: initial learning rate. scalar.
    global_step: scalar.
    warmup_steps: scalar. During warmup_steps, learning rate increases
        until it reaches init_lr.
    '''
    step = tf.cast(global_step + 1, dtype=tf.float32)
    return init_lr * warmup_steps ** 0.5 * tf.minimum(step * warmup_steps ** -1.5, step ** -0.5)
```
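The schedule is easy to inspect in plain Python. `noam_lr` is an illustrative name, and init_lr=3e-4 is just an example value. The paper's formula is d_model^-0.5 · min(step^-0.5, step · warmup^-1.5). This implementation replaces d_model^-0.5 with init_lr · warmup^0.5. As a result, the learning rate rises linearly, peaks at exactly init_lr when step = warmup_steps, and then decays as 1/√step.

```python
def noam_lr(init_lr, global_step, warmup_steps=4000.0):
    step = global_step + 1.0                     # same +1 as noam_scheme
    return init_lr * warmup_steps ** 0.5 * min(step * warmup_steps ** -1.5, step ** -0.5)

for gs in (0, 999, 3999, 15999):
    print(gs, noam_lr(3e-4, gs))
# peak of 3e-4 at global_step 3999 (step 4000); half of that at step 16000
```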

These three files are the heart of the implementation.

Understanding Q, K and V:

1. https://blog.csdn.net/qq_42004289/article/details/85990009

2. https://www.bilibili.com/video/BV1J441137V6?from=search&seid=12093118819715772273

3. https://charon.me/posts/transformer/

Source code:

https://github.com/Kyubyong/transforme

References:

1. https://zhuanlan.zhihu.com/p/110800071

Understanding positional encoding:

2. https://www.zhihu.com/question/347678607/answer/864217252

3. https://zhuanlan.zhihu.com/p/110800071

4. https://zhuanlan.zhihu.com/p/63191028

5. https://charon.me/posts/transformer/

6. https://zhuanlan.zhihu.com/p/47510705

7. https://blog.csdn.net/qq_42004289/article/details/85990009

8. https://spaces.ac.cn/archives/4765

9. https://spaces.ac.cn/archives/6933

10. https://www.zhihu.com/question/347678607/answer/864217252

11. https://zhuanlan.zhihu.com/p/95079337

12. https://blog.csdn.net/u012526436/article/details/86295971

13. https://zhuanlan.zhihu.com/p/60821628